
ClsMix: Hybrid (or mixed) classification of images

Direct access to online help: ClsMix

Access the application from the menu: "Tools | Image classification | Hybrid (mixed) classification"

Presentation

ClsMix is an application for the hybrid (or mixed) classification of remote sensing images. The adjective hybrid (or mixed) is used because the classification method combines elements of a supervised classification (the training areas) with elements of an unsupervised classification (the results of the latter).

In this way, a new classified image is created from an image containing several training areas and an image produced by an unsupervised classification method. The MiraMon IsoMM application is suggested for the unsupervised classification.

The image with the classes that have been obtained by unsupervised classification must be of the byte, integer or unsigned integer types, regardless of whether it is compressed or not. The unsupervised classes will begin with a value of 1, reserving the value of zero in case there are image pixels that have not been classified.

The image with the training areas must be of the same type and resolution as the image produced through unsupervised classification, and must be byte, integer or unsigned integer, regardless of whether it is compressed or not. The classes of training areas must be numbered consecutively from the number 1 (the zero value will be for all the pixels of the image that are not in any training area).
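The input requirements above can be checked before running the classifier. The following is a minimal sketch, assuming both images have already been loaded as NumPy integer arrays (the loading step is not shown, and the function name `check_inputs` is hypothetical). It uses the array shape as a stand-in for "same resolution", which is an assumption of this sketch and not a description of how ClsMix validates its inputs.

```python
import numpy as np


def check_inputs(training: np.ndarray, spectral: np.ndarray) -> list[str]:
    """Return a list of problems found; an empty list means the inputs look usable."""
    problems = []

    # Assumption: equal array shape is used as a proxy for equal resolution/extent.
    if training.shape != spectral.shape:
        problems.append("training and unsupervised images differ in shape")

    # Both images must hold integer class codes (byte, integer, unsigned integer).
    for name, img in (("training", training), ("unsupervised", spectral)):
        if not np.issubdtype(img.dtype, np.integer):
            problems.append(f"{name} image is not an integer type")

    # Training classes must be numbered consecutively from 1; 0 means "no training area".
    classes = np.unique(training[training > 0])
    expected = np.arange(1, classes.size + 1)
    if not np.array_equal(classes, expected):
        problems.append("training classes are not consecutive from 1")

    return problems
```

An empty list suggests the two rasters meet the stated conditions; any message points to the requirement that failed.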

The application will create a text file in which, for every thematic or informational class (a group of training areas that are samples of one kind of cover), the proportions of the different spectral classes that represent it (obtained from the unsupervised classification) will be given (see below for an explanation of representativity). Moreover, the proportions of the different thematic classes to which each spectral class could contribute will also be given. If several different thematic classes are possible, the application will select the one with the greatest frequency.

Using two frequency thresholds given by the user (fidelity and representativity), each spectral class will be linked to a corresponding thematic class or left as unclassified. With these results, the image produced by this hybrid (or mixed) classification will be created. Unclassified pixels will be given a value of 0 and labeled as NoData.

Key concepts of the classifier:

- FIDELITY of the spectral class to the thematic (or informational) class: the minimum proportion of a spectral class that must fall within the thematic class to which it will ultimately be assigned. The proportion is expressed relative to the spectral class. To understand this concept, note that each spectral class is ultimately assigned to the thematic class with which, according to the crossing of the images, it most frequently corresponds. However, this assignment may not be desirable if the thematic class with the maximum frequency of correspondence contains only 20 % of the pixels of the spectral class. Fidelity should normally be a number between 0.5 and 1, but with a value of 1 it is unlikely that a spectral class will be assigned to any thematic class, since the requirement is too strict. A value of 0.5 means that a spectral class can be assigned to a thematic class when only half of its pixels spatially coincide, in the training areas, with that thematic class; this value can be reduced, but values progressively lower than 0.5 imply an increasingly weak relationship. With values closer to 1 (for example 0.8) the assignment is more robust, but more pixels will remain unclassified.

- REPRESENTATIVITY of the spectral class in the thematic class: the minimum proportion of the spectral class within the thematic class. For example, it is possible to indicate that a spectral class should not be assigned to any thematic class if it displays a correspondence of less than 0.01 % with the thematic class with which it has the highest correspondence: the spectral class is so unrepresentative that it is not worth taking the risk of using it. Representativity should normally be a very low number, unless one is very sure of a one-to-one correspondence between thematic and spectral classes.
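The two thresholds above can be illustrated with a small cross-tabulation. This is a minimal sketch and not the ClsMix implementation: it assumes fidelity is computed as the share of a spectral class's training-area pixels that fall in its most frequent thematic class, and representativity as the share of that thematic class's pixels that belong to the spectral class. The function names `crosstab` and `assign_classes` are hypothetical.

```python
import numpy as np


def crosstab(training: np.ndarray, spectral: np.ndarray) -> np.ndarray:
    """Count pixels per (spectral class, thematic class) pair inside training areas."""
    mask = (training > 0) & (spectral > 0)  # 0 = no training area / unclassified
    counts = np.zeros((int(spectral.max()) + 1, int(training.max()) + 1), dtype=np.int64)
    np.add.at(counts, (spectral[mask], training[mask]), 1)
    return counts[1:, 1:]  # drop the row and column of class 0


def assign_classes(counts: np.ndarray, fidelity: float, representativity: float) -> np.ndarray:
    """Return a lookup table: index = spectral class, value = thematic class (0 = NoData)."""
    spec_totals = counts.sum(axis=1, keepdims=True)  # pixels per spectral class
    them_totals = counts.sum(axis=0, keepdims=True)  # pixels per thematic class
    # Assumed definitions (see lead-in): proportions relative to each total.
    fid = np.divide(counts, spec_totals, out=np.zeros(counts.shape), where=spec_totals > 0)
    rep = np.divide(counts, them_totals, out=np.zeros(counts.shape), where=them_totals > 0)

    rows = np.arange(counts.shape[0])
    best = counts.argmax(axis=1)  # most frequent thematic class per spectral class
    accepted = (
        (counts[rows, best] > 0)
        & (fid[rows, best] >= fidelity)
        & (rep[rows, best] >= representativity)
    )
    lut = np.zeros(counts.shape[0] + 1, dtype=np.int64)
    lut[1:] = np.where(accepted, best + 1, 0)
    return lut


# Toy example: 2 thematic classes in the training image, 3 spectral classes.
training = np.array([[1, 1, 2, 0], [1, 2, 2, 0]])
spectral = np.array([[1, 1, 2, 3], [1, 1, 2, 3]])
lut = assign_classes(crosstab(training, spectral), fidelity=0.5, representativity=0.01)
classified = lut[spectral]  # relabel every pixel through the lookup table
```

In this toy case spectral class 1 has a fidelity of 0.75 to thematic class 1, so raising the fidelity threshold to 0.8 would leave it unclassified, which mirrors the trade-off described above.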

When establishing the correspondences, one of three weighting modes can be chosen:

- Without weighting: Ratios between spectral and informational classes are calculated "naturally", without any modification.

- With weighting: Ratios between spectral and informational classes are weighted by the area (typically the number of pixels) of each training area. In this mode, if an informational category covers a large area, its proportion of belonging increases in line with the proportion of that category in the training areas. If all categories are equally represented in the training areas, this mode is equivalent to the unweighted mode.

- Use of conditional probabilities: This mode works like the "with weighting" mode, but the weights are supplied by the user instead of being derived from the relative area of the different categories in the training areas. In other words, the area of each category in the training areas does not matter; instead, the user indicates which categories are expected to be more likely in the final map through a list of approximate expected frequencies (expressed as proportions between 0 and 1).
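One possible reading of the three modes is sketched below. It is an assumption, not the documented ClsMix algorithm: each column of the cross-tabulation (one thematic class) is multiplied by a weight and the rows are then renormalized, with weights equal to 1 (mode 0), to the class's share of training pixels (mode 1), or to user-supplied expected frequencies (mode 2). The function name `weighted_proportions` is hypothetical.

```python
import numpy as np


def weighted_proportions(counts, mode: int, user_weights=None) -> np.ndarray:
    """Per-spectral-class proportions over thematic classes under a weighting mode.

    counts: 2-D array, rows = spectral classes, columns = thematic classes.
    """
    counts = np.asarray(counts, dtype=float)
    n_thematic = counts.shape[1]

    if mode == 0:      # without weighting: use the counts as they are
        weights = np.ones(n_thematic)
    elif mode == 1:    # weight by each thematic class's share of training pixels
        area = counts.sum(axis=0)
        weights = area / area.sum()
    elif mode == 2:    # weights forced by the user (expected frequencies)
        if user_weights is None:
            raise ValueError("mode 2 requires user_weights")
        weights = np.asarray(user_weights, dtype=float)
    else:
        raise ValueError("mode must be 0, 1 or 2")

    scored = counts * weights                      # scale each thematic column
    totals = scored.sum(axis=1, keepdims=True)     # renormalize per spectral class
    return np.divide(scored, totals, out=np.zeros_like(scored), where=totals > 0)
```

Under this reading, modes 0 and 1 give identical proportions whenever every thematic class has the same number of training pixels, which agrees with the remark in the "with weighting" item.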

More information can be consulted at the following references:

Serra P, Moré G, Pons X (2005) Application of a hybrid classifier to discriminate Mediterranean crops and forests. Different problems and solutions. XXII International Cartographic Conference (ed.) Mapping approaches into a changing world CD-ROM. (ISBN: 0-958-46093-0) La Coruña.

Moré G, Pons X, Serra P (2006) Improvements on Classification by Tolerating NoData Values - Application to a Hybrid Classifier to Discriminate Mediterranean Vegetation with a Detailed Legend Using Multitemporal Series of Images. IEEE Press Vol. I, 192-195. DOI 10.1109/IGARSS.2006.582. ISBN 0-7803-95-10-7 IGARSS06: International Geoscience & Remote Sensing Symposium - Remote Sensing: A Natural Global Partnership. Denver (Colorado).

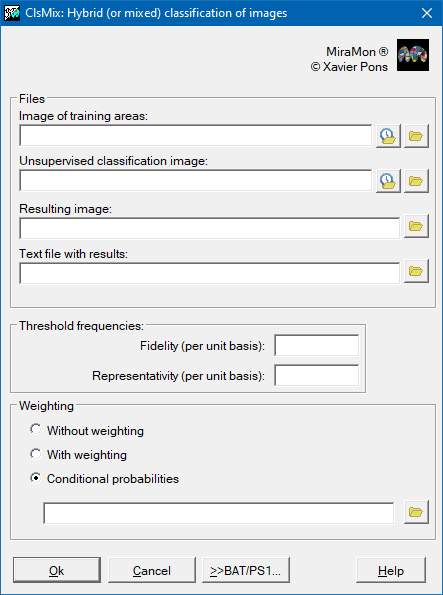

Dialog box of the application

Syntax

Syntax:

- ClsMix TrainingImage UnsupervisedClsImage ResultingImage TextResultats Fidelity Representativity Weighting

Parameters:

- TrainingImage (Training image - Input parameter): Name of the image with the training areas (without extension).

- UnsupervisedClsImage (Unsupervised Cls image - Input parameter): Name of the image produced by unsupervised classification (without extension).

- ResultingImage (Resulting image - Output parameter): Name of the image to be created (without extension).

- TextResultats (Text results - Output parameter): Name of the text file with the results (with extension, if desired; .txt is commonly used).

- Fidelity (Fidelity - Input parameter): Fidelity of the spectral class to a thematic class, expressed as a proportion between 0 and 1.

- Representativity (Representativity - Input parameter): Representativity of the spectral class in the thematic class, expressed as a proportion between 0 and 1.

- Weighting (Weighting - Input parameter): Possible frequency weighting of the thematic classes according to the number of pixels of each training area. Options:

- 0: Without weighting

- 1: With weighting

- 2: Weighting by conditional probabilities, which must be provided in a text file passed as an argument on the command line (2 columns: the first with the code of the thematic class, and the second with the frequency)
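The syntax above can be assembled programmatically, for example to launch the tool from a script. The following is a minimal sketch: the helper name `build_clsmix_command` and the file names in the usage note are hypothetical, and the executable is assumed to be reachable as `ClsMix`. Mode 2 is deliberately left out because the syntax line does not show where the probabilities file goes.

```python
def build_clsmix_command(training, unsupervised, result, text_results,
                         fidelity, representativity, weighting):
    """Return the ClsMix argument list in the order given in the Syntax section."""
    # Fidelity and representativity are proportions, not percentages.
    for name, value in (("fidelity", fidelity), ("representativity", representativity)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    # Assumption of this sketch: only modes 0 and 1 are handled, because the
    # position of the mode 2 probabilities file is not shown in the syntax.
    if weighting not in (0, 1):
        raise ValueError("this sketch only handles weighting modes 0 and 1")
    return ["ClsMix", training, unsupervised, result, text_results,
            str(fidelity), str(representativity), str(weighting)]
```

For instance, `build_clsmix_command("training", "isomm", "hybrid", "hybrid.txt", 0.8, 0.01, 1)` yields a list that could be passed to `subprocess.run`, which avoids quoting problems with file names that contain spaces.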